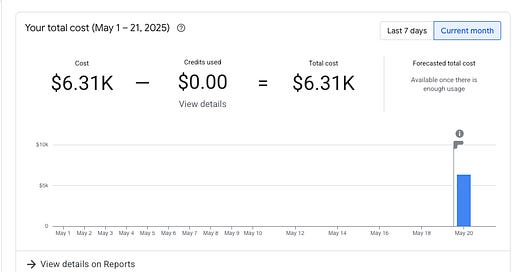

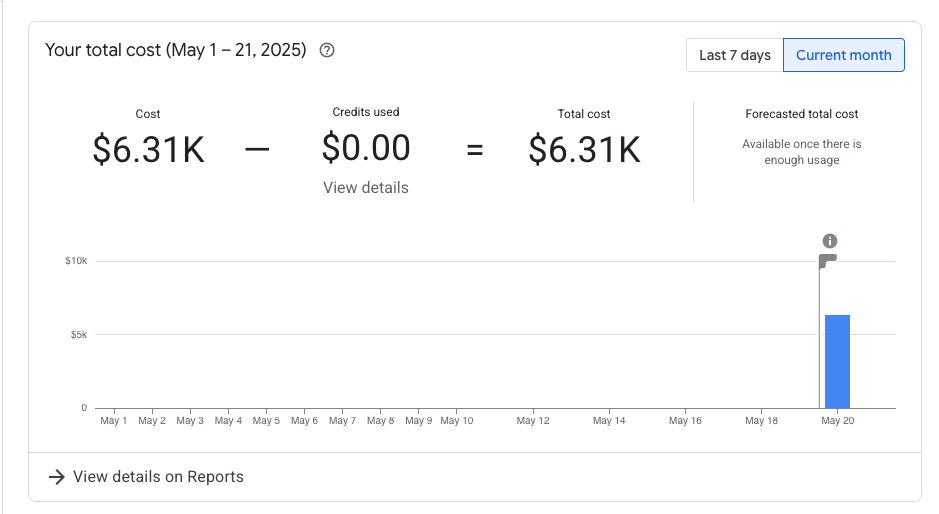

Yesterday, I may have made a whoopsie (🙂). On a new Google Cloud project for some data analytics, I ran a dbt project against some tables where 1) I didn't understand the pricing and 2) I didn't understand the table sizes. BigQuery billing isn't real-time: the queries ran in the afternoon, I realized what was happening around 4pm (and stopped querying those tables), and by 10pm I had a $6.3k bill. Fun stuff.

What most non-engineers don't realize is that mistakes like this are fairly common. At large companies, I'd estimate ~5-10% (or more) of cloud spend is erroneous and just gets forgiven as a cost of doing business (that's what I did here).

Startups like Antimetal and Pump are attacking this problem at the root: compute instances, where most of the world's cloud spend goes. But there's a long tail of ultra-expensive products on these clouds that cause issues like this every day, even for the best engineering organizations in the world.

The two biggest areas (from my time on the engineering side) are data and metrics / logging. Most big companies have eight-figure Snowflake / Databricks / warehouse bills, so even simple tools that optimize queries, or catch when you're querying large tables at high throughput, are massively helpful for bringing those costs down. Metrics and logging: a single log line in the right hot path can cost hundreds of thousands of dollars a month.
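To make the "simple tools" point concrete, here's a minimal sketch of a pre-flight cost guard plus a back-of-the-envelope log cost estimate. Everything in it is hypothetical: the function names are made up for illustration, the $6.25/TiB on-demand query rate and the $0.50/GB log ingestion rate are assumptions (check current pricing for your provider and region), and the traffic numbers are invented. In practice, the bytes estimate for a BigQuery query could come from a dry run (`bigquery.QueryJobConfig(dry_run=True)`, then reading the job's `total_bytes_processed`), and BigQuery can also enforce a hard server-side cap via `maximum_bytes_billed`.

```python
# Hypothetical cost guard + log cost estimate. All rates below are ASSUMPTIONS,
# not quoted prices; replace them with your provider's current pricing.
TIB = 1024 ** 4
ASSUMED_USD_PER_TIB_SCANNED = 6.25  # assumed on-demand query rate
ASSUMED_USD_PER_GB_LOGGED = 0.50    # assumed log ingestion rate


class QueryTooExpensive(Exception):
    """Raised when a query's estimated cost exceeds the configured budget."""


def estimate_query_cost_usd(bytes_processed: int,
                            usd_per_tib: float = ASSUMED_USD_PER_TIB_SCANNED) -> float:
    """Convert an estimate of bytes scanned into a dollar cost."""
    return bytes_processed / TIB * usd_per_tib


def check_query_budget(bytes_processed: int, max_usd: float,
                       usd_per_tib: float = ASSUMED_USD_PER_TIB_SCANNED) -> float:
    """Return the estimated cost, or raise before the query ever runs."""
    cost = estimate_query_cost_usd(bytes_processed, usd_per_tib)
    if cost > max_usd:
        raise QueryTooExpensive(
            f"estimated ${cost:,.2f} for {bytes_processed / TIB:,.2f} TiB "
            f"exceeds budget ${max_usd:,.2f}"
        )
    return cost


def monthly_log_cost_usd(lines_per_sec: int, bytes_per_line: int,
                         usd_per_gb: float = ASSUMED_USD_PER_GB_LOGGED,
                         days: int = 30) -> float:
    """Estimate what one log statement costs per month at a given rate."""
    total_bytes = lines_per_sec * bytes_per_line * 86_400 * days
    return total_bytes / 1e9 * usd_per_gb


if __name__ == "__main__":
    # A 2 TiB scan fits under a $50 budget.
    print(check_query_budget(2 * TIB, max_usd=50))
    # A 1,000 TiB scan is blocked instead of silently billed.
    try:
        check_query_budget(1000 * TIB, max_usd=50)
    except QueryTooExpensive as err:
        print("blocked:", err)
    # One 1 KB log line on a path hit 200k times/sec, for a month.
    print(monthly_log_cost_usd(200_000, 1_000))
```

At the assumed query rate, a bill around $6.3k corresponds to roughly 1,000 TiB scanned, which is exactly the kind of query a guard like this would refuse up front. The log example works out to $259,200 a month under its made-up inputs, which is how one log line ends up in the "hundreds of thousands" range.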

I can see why most startups don't focus here (it's not a venture-scale market), but in my opinion it's an incredible area to build a business in.

LLM APIs are probably another good area, now that I think about it.